The Irish Medical Times - How woke is Artificial Intelligence


Now imagine my neighbour created a digital database of contacts from their friends and family, and those contacts were then migrated to my phone as the basis for my social and business circle. The information would be largely useless. Yes, I could invite their mother out to a Mother’s Day brunch, and I could use their dentist if my tooth hurt (I’m not suggesting their mother’s company would induce a pain in my face), but the experiences would not be quite the right fit for me. I need my own database of my own contacts, or at least the contacts of someone who has had similar life experiences.

There are deficits in the universal application of data results, and the bifurcation points in medicine include gender/sex and race. Perhaps this may be a modern taboo, but scientists define male and female as different at a cellular level (that’s not to say people shouldn’t flip the script). It has been proven that women can metabolize drugs differently to men, and women experience different endocrine changes through puberty, pregnancy and menopause. Women also live longer than men and therefore experience more late effects, Alzheimer’s, etc. Some treatments, including certain cancer protocols, work better for women than men and vice versa. The differences go on.

Some evangelists of this data gap point to big problems in health today due to the historical white male bias in medical trials. Heart disease and stroke are the leading causes of death and disability worldwide; however, in part due to messaging based on the male experience, it can be harder for a woman to recognise heart issues and to have them diagnosed and treated. As a result, after a heart event or stroke, women face higher mortality and tend to endure longer hospitalisation. Cardiovascular disease can affect men and women differently in terms of symptoms, risk factors and outcomes. Yet women have historically been underrepresented in, even excluded from, cardiovascular clinical trials - it has only been mandatory to include them since 1993.

Women’s health decisions are often made on a narrow set of data; we need to be aware of the biometric data divide. But it’s not just a person’s sex that divides; it’s race too. A recent study in immunogenetics out of the University of California showed that different ethnicities have distinct genetic mutations that increase their risk for particular diseases and affect how they respond to medicine. It concluded that African American children, neglected by research, died from asthma at a rate 10 times higher than white children.

While on the subject of disequilibrium, men are underrepresented in mental health research trials. Inclusivity and diversity across the board are needed, and thankfully, this is happening.

Like a lot of young people my age, I enjoy using wearables (I’m using ‘young’ in the diverse inclusive sense, aka ‘people with their own teeth’ - most of them). As I have a heart condition, I am particularly interested in keeping an eye on my heart rate. With the occasional ricochet between 50 and 180 bpm, I wasn’t sure if it was capturing real life (or death); I believed my digital wrist necromancer was playing tricks on me. I opted for a chest monitor when exercising, which is meant to offer enhanced accuracy. I found it uncomfortable to wear, and its readings dubious, probably because of placement. I couldn’t help but wonder if the device would be a more natural fit on a man. Was it originally designed with a male torso in mind? Probably.

I wasn’t the only person to wonder about a better fit for women, as there are now smart bras entering the market (or in development). I have yet to try one, but my understanding is that a smart bra is a wearable for women; a heart smart bra, for example, collects data on your cardiovascular health. It is an augmented, comfortable garment that uses textile sensors around the torso and heart to pick up physiological data, and it connects with an app on your phone. It monitors heart rate and rhythm, ECGs, respiratory rate, temperature, posture and movement. Through the use of personalized biomarkers it looks for symptoms and triggers for heart issues. The information can be shared with your doctor for early detection and management of problems, and to track the efficacy of therapies. If enough women were to wear the smart bra, new datasets could emerge on female cardiovascular health: an alternative way to crowdsource information instead of the traditional formal invitation-only trials. There are also prototype smart bras to detect early breast cancer and stress.

Inventive AI doesn’t stop at ladies’ underwear; there are also smart toilets. Not to be indecorous, but these techno lavs monitor the products the patients leave in the bowl and test prostate health, blood sugars, intestinal issues and other ordurous matters. They are called ‘wellness toilets’. I imagine you could link your fancy toilet to your doctor or pharmacy to catch UTIs, etc; perhaps even before you’ve finished in your water closet, a script will have been sent to your phone. If we all join the posh privy parade, collective problems such as poor nutrition, diabetes and pathogens could be identified at a micro community level (wastewater monitoring has already been shown to identify Covid hotspots before outbreaks occur).

Lack of diversity in AI will impact us all. Some may see it as hysterics from woke wonk snowflakes, but any form of homogeneity will lead to skewed results and a clinical body of knowledge that is not applicable to swathes of the population. If you are well represented in clinical trials, it’s likely you have a loved one who isn’t. It is important for safety reasons that the person taking the drug or receiving the treatment has been sufficiently represented in the testing and analysis. If you throw the net wider, there is also an increased chance of discovering a better treatment or approach for all patients.

The lack of inclusion isn’t only down to exclusion; there can also be a reluctance among certain minority groups to take part in the development stage due to historical mistrust, which will take time to redress.

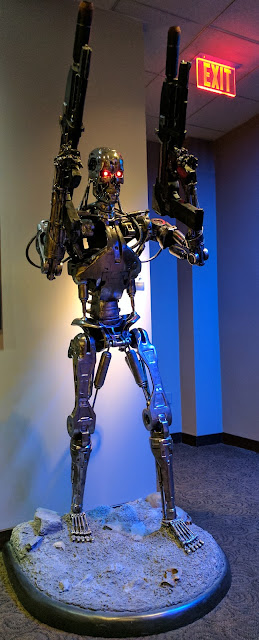

I admit we all have our biases, and it is not surprising that they have emerged in AI. Diversity and inclusion should enhance the tech, not make it so generic it loses its purpose. I think it will make for interesting learning curves as inventions created for certain populations are shared globally. For example, the Japanese government has a strategic plan that 4 out of 5 elderly people in need of care will have robot support. Healthcare robots can attend the bedside of a patient suffering from dementia, anxiety or depression and provide compassionate words when they detect tones of stress in the patient's voice. I’m curious how that would translate to an Irish setting. I have visions of a robot bowling around St James’s Hospital in Dublin’s inner city, skidding up to bedsides with an “Ah jaysus, howaya”, and reporting back to the medical staff (in a robot voice) - The.Bleedin.State.Of.Your.One.In.Bed.5 - EXTERMINATE!

I am clearly exposing a robot bias based on a childhood TV show about a doctor (of dubious doctorial credentials) travelling through time in a phone box chased by large angry pepper pots... although, I’ve seen stranger things in St James’s.

Read original article here - How woke is Artificial Intelligence
